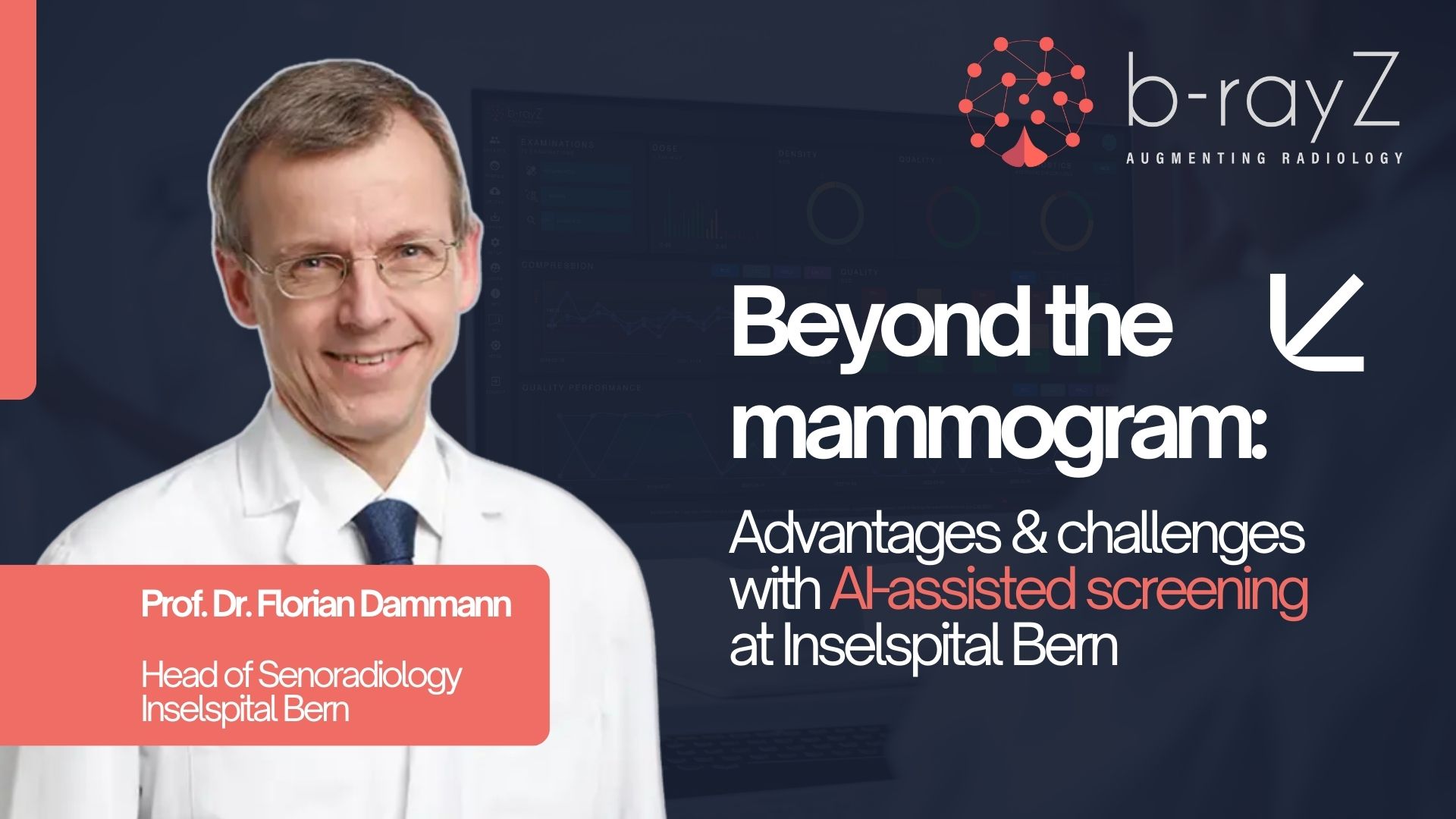

Exploring AI, Image Quality, and the Future of Breast Screening

In the rapidly evolving world of breast cancer screening, one of the most persistent challenges remains the consistent assessment of diagnostic image quality. A new study led by Carlotta Ruppert and Tina Santner, alongside a European research team, takes a critical look at the widely used PGMI (Perfect-Good-Moderate-Inadequate) image quality system and compares it to AI-powered software developed by b-rayZ.

Their multicenter, multireader study analyzed 520 mammograms reviewed by five expert radiographers from three countries – comparing these human assessments against a dedicated AI module. The findings are striking: while human readers showed significant variability, the AI tool demonstrated comparable – and in some areas superior – consistency and performance. One thing becomes clear: b-rayZ sets new standards in objectivity and reliability by consistently applying rules without bias. At the same time, it also opens up new conversations about the role of expert interpretation. As with any intelligent system, real-world context – such as individual examination situations or training intentions – is not always fully represented in data alone. This is where human expertise remains essential: to validate, guide, and apply AI feedback meaningfully. b-rayZ acknowledges this with transparency and is actively shaping a future in which AI and professionals collaborate – not compete. It’s precisely this nuanced and realistic approach that is so valuable and appreciated.

But behind the data are two professionals passionate about elevating care and challenging the status quo. We spoke with Carlotta and Tina to learn more about their work, motivations, and how b-rayZ’s technology is supporting a shift toward smarter, more standardized diagnostics.

Can you tell us a bit about your background and your current roles?

Carlotta:

I am the Lead AI Engineer at b-rayZ. I studied Medical Engineering with a focus on Data Science and have been working in the field of AI applied to medical imaging for the past five years. At b-rayZ, I am responsible for overseeing all AI models related to image quality evaluation and also maintain a broad overview of PGMI systems across Europe.

Tina:

I am a radiographer. I was already interested in breast imaging as a student, and I have been involved with it almost continuously ever since. I have experienced very different environments at different locations; I have worked in organized screening, but also in diagnostics and assessment, in MRI, in training and continuing education, and on research projects. As part of my PhD, I then embarked on an exciting collaboration with b-rayZ. I am familiar with everyday clinical practice and continue to work in it myself, which allows me to understand and communicate the tasks, challenges, and needs from the perspective of a future user.

What sparked the idea behind this study – and what exactly is PGMI, and why is it still the standard despite its known weaknesses?

Tina:

As a radiographer, I learned how to interpret the quality of mammography images and how important it is to be aware of our responsibility. A reliable diagnosis is only possible if we provide clear and meaningful images. PGMI and similar approaches can help us with this. These systems consist of a catalog of criteria, each covering an individual aspect that must be met for an image to be acceptable. Depending on the result, every view is classified as perfect, good, moderate, or inadequate.

Such analyses are very complex and time-consuming. Despite the precise rules, subjective influences also play a role in the assessment. On the one hand, this is a long-standing and never fully resolved criticism of such systems. On the other hand, human readers can “understand” the entire situation of an individual examination. I expect AI and humans to collaborate in the future. Software can process much more data in a short time and perform rigorous measurements and calculations, while humans can interpret the results and, if necessary, correct them.

Carlotta:

PGMI, which stands for Perfect, Good, Moderate, and Inadequate, is a standardized method used to assess the diagnostic quality of mammographic images. Despite its importance, the application of PGMI varies significantly across countries, which can lead to inconsistencies in image assessment. What motivated this study was the challenge of consistency. By comparing radiographer assessments with AI outputs, we hoped to better understand the limitations of manual scoring and the potential benefits of integrating AI into quality control workflows.

What did you find most surprising in the results?

Carlotta:

I was aware that there’s quite a bit of subjectivity in how image quality is interpreted. Still, I was surprised by just how much variation we saw, especially in the overall PGMI evaluation. One example that stood out was the inconsistency in assessing specific features like the depiction of the inframammary fold.

Tina:

Through the study, we were able to confirm that algorithms can already solve such tasks quite well. This was what I had hoped for and expected, but at the beginning of the study I did not know what stage of development we were at.

Where did the AI software perform better than expected?

Carlotta:

One area where the AI performed better than I expected was nipple orientation. This feature showed very low inter-reader agreement among humans. I had assumed this would be easier for humans to assess than for an AI model. But I was wrong. One reason the AI likely performed well here is its precision in measurement.

Tina:

What I was very pleased about, although it goes beyond the study, is the user interface. All results are clearly presented, and as an expert user I can understand the process, see which aspects led to a particular score, and even intervene in it. I believe such features could be very valuable for our daily work.

Were there specific features that were particularly difficult for both humans and AI?

Carlotta:

Yes – the three with the lowest agreement across readers and the AI were image symmetry, nipple orientation, and visibility of the inframammary fold.

Tina:

What certainly presents a challenge are images in which certain landmarks, such as the position of the nipple, are simply not clearly visible. We must also remember that the breast is soft tissue. The boundaries are not always perfectly clear and distinct. And we should not forget the differences in contrast behavior between images from different vendors.

How do you interpret the variation in reader agreement across countries and programs?

Tina:

Such differences were entirely to be expected. Systems like PGMI are always subject to personal influences, even when evaluators carry out their work carefully. PGMI has been around since the 1980s, and a lot has changed since then. Not all countries work with the same system, so there are different cultures and traditions. For the study, we had to establish a common basis, which made the task even more challenging for some of us.

Do you think clinical culture influences how radiographers learn to evaluate quality?

Tina:

Absolutely. Locally at each site, the working atmosphere, communication, throughput, and expectations all shape how quality is perceived. I was quite insecure before my first proper PGMI evaluation, but because this was practiced and communicated so positively, I quickly realized that PGMI could help me in a very concrete way to perfect my technique.

If you were to design the perfect PGMI system today, what would it look like?

Carlotta:

I would start by rethinking the individual quality features. Some, like image symmetry, feel outdated. I would also aim for a more unified interpretation of criteria. Personally, I prefer systems that take a holistic approach – considering how features perform together.

Tina:

The focus should be right. I want an approach that gives radiographers a fair overview of their performance. PGMI should not be there to put one another down, but to support one another and strengthen the team.

What role do you see AI playing in radiographer education and feedback systems – and how does b-rayZ fit into this vision?

Carlotta:

AI can play a major role in improving standardization and education. It can automate repetitive tasks, provide objective feedback, and help radiographers improve their technique. At b-rayZ, we focus on this intersection of quality control and clinical support. One of our strengths is real-time feedback during acquisition.

Tina:

AI certainly has the potential to provide faster and more objective feedback. This creates the opportunity to give direct feedback during the examination. It can be particularly helpful during training, or in helping less experienced colleagues keep an overview in stressful situations. As a senior radiographer, I’m happy to get support and to delegate routine tasks and some comprehensive evaluations to software.

What’s next for your research?

Carlotta:

The next step is working toward a more unified PGMI system across Europe. And of course, we will keep improving our AI models.

If you could give one piece of advice to a radiographer just starting out, what would it be?

Carlotta:

Stay open to working with AI and don’t see it as something to fear. It’s here to support, not replace.

Tina:

I would advocate for breast diagnostics. It’s such a relevant, modern, and diverse topic, yet it is still underrepresented in training. I personally find that unfortunate. A careful and reliable approach starts with strong, motivated people in the field. The topic also affects me personally: as a woman, I believe we all wish for and deserve careful and reliable treatment.